About the Institute for Security and Technology

AI Loss of Control Risk

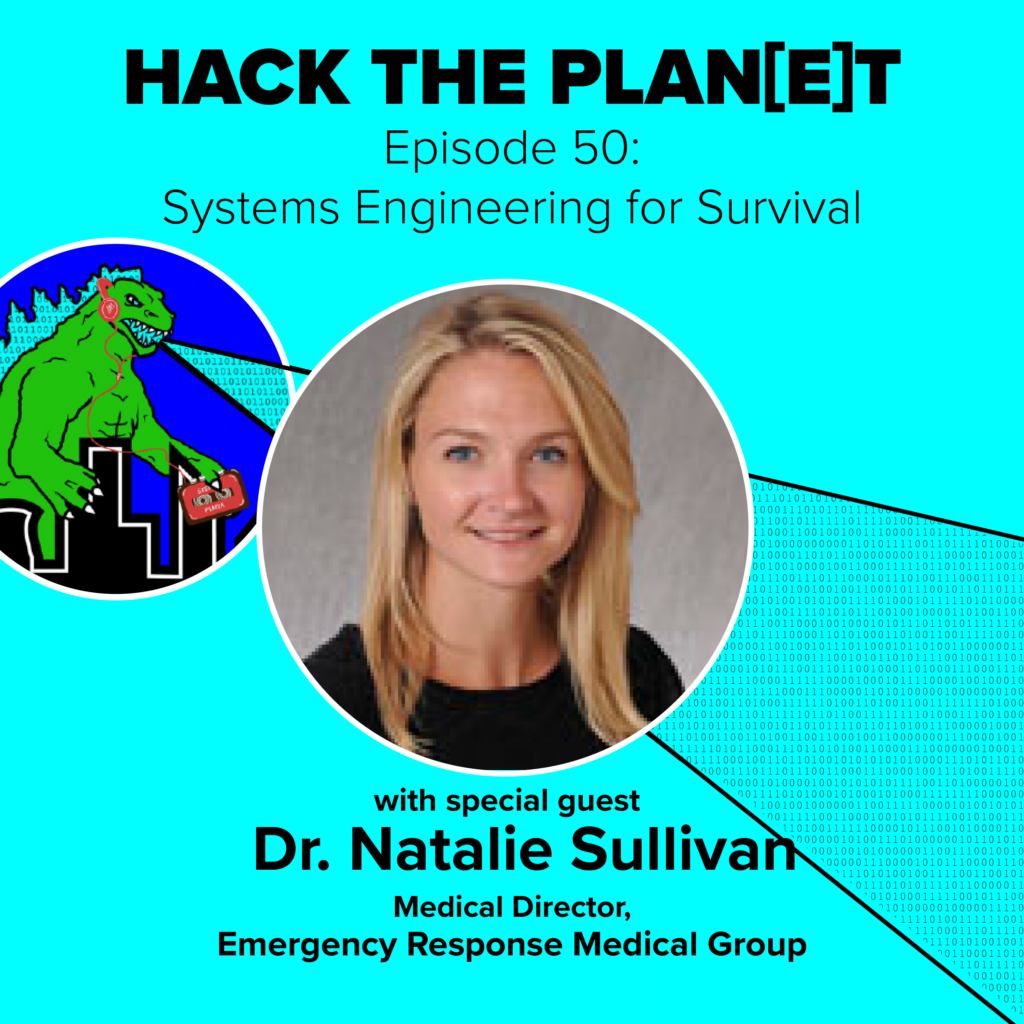

When Every Second Counts

Meet the Winners of the Third Annual Cyber Policy Awards

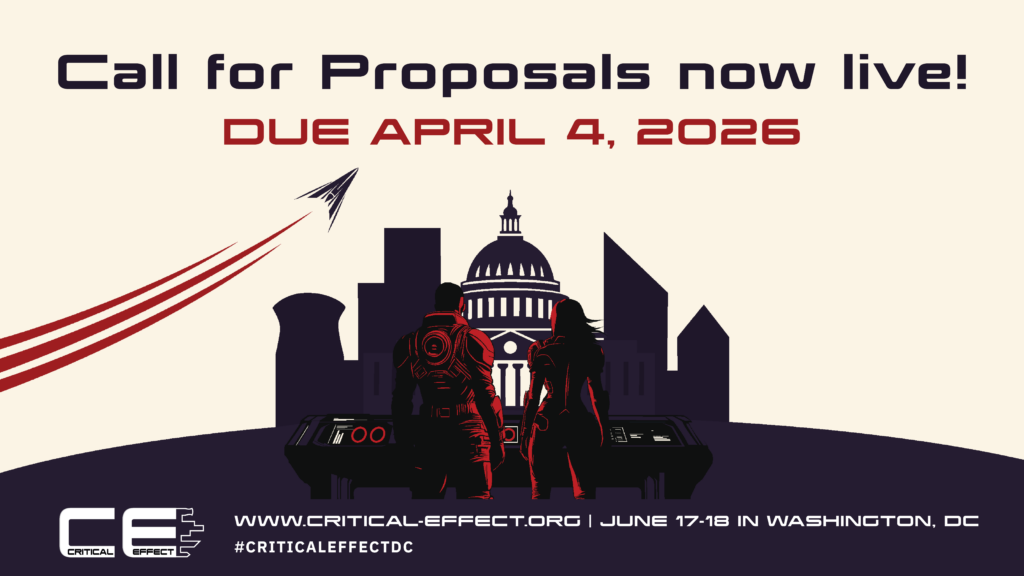

Critical Effect DC 2026

Latest News, Events & Research

Focus Areas

Our work to advance national security and global stability through technology built on trust encompasses three thematic focus areas.

GEOPOLITICS OF TECHNOLOGY

FUTURE OF DIGITAL SECURITY

Identifying vulnerabilities in modern digital infrastructures and proposing ways to build trust, safety, and security into digital technologies from the ground up.

INNOVATION & CATASTROPHIC RISK

IST By The Numbers

97 recommendations

issued by IST in 2025 urged public- and private-sector action and laid out actionable pathways for implementing them

12 hospital communities

that UnDisruptable27 is engaging with across the country, working directly with water owners and operators to overcome the barriers that prevent utilities from adopting risk-mitigating engineering solutions

70+ working groups, roundtables, and workshops

brought together policymakers, members of the private sector, and civil society experts in 2025 to address a range of topics, from cyber incident victim notification and the strategic disruption of ransomware to the implications of artificial intelligence for national security and export controls on AI chips

3,000+ attendees

joined IST at public events in 2025, including large-scale task force gatherings, conferences, webinars, and fireside chats

Our Supporters

Get IST updates in your inbox

Subscribe to The TechnologIST, IST’s monthly newsletter highlighting major issues at the intersection of technology and security

Support IST

IST is a 501(c)(3) nonprofit organization. Our success would be unimaginable without the many foundations, organizations, government entities, and individuals who support our work.

Explore Our Research

IST regularly publishes large-scale reports, briefings, and one-pagers based on in-depth research, subject-matter working groups, surveys, and interviews.